Indie Dev Log #5 — Ops 🛠️: When AIs Talk to Each Other

Closing builder knowledge gaps with multi-model and multi-agent collaboration

When I started building products, I hit a wall pretty quickly. UX design? No clue where to start. Performance optimization? Hours of googling just to understand the problem. Every new technical challenge meant diving into tutorials, forums, and documentation while my actual product sat waiting.

That was before I discovered something that completely changed my development workflow: letting different AI models have discussions with each other and conduct research autonomously.

The Universal Problem

Anyone building a product alone runs into this. You wear multiple hats (developer, designer, marketer, sometimes even customer support), and each role comes with knowledge gaps that feel impossible to fill quickly.

I've faced this in two main scenarios that probably sound familiar:

Case 1: Starting a new feature in an unfamiliar domain. I needed to improve my app's UX but didn't even know what basic UX principles existed, let alone how to apply them.

Case 2: Getting stuck on a technical problem where my usual solutions weren't working. I'd already tried several approaches that seemed reasonable, but nothing solved the issue.

The traditional approach meant stepping away from coding to become a student again—learning the domain from scratch before I could even ask the right questions.

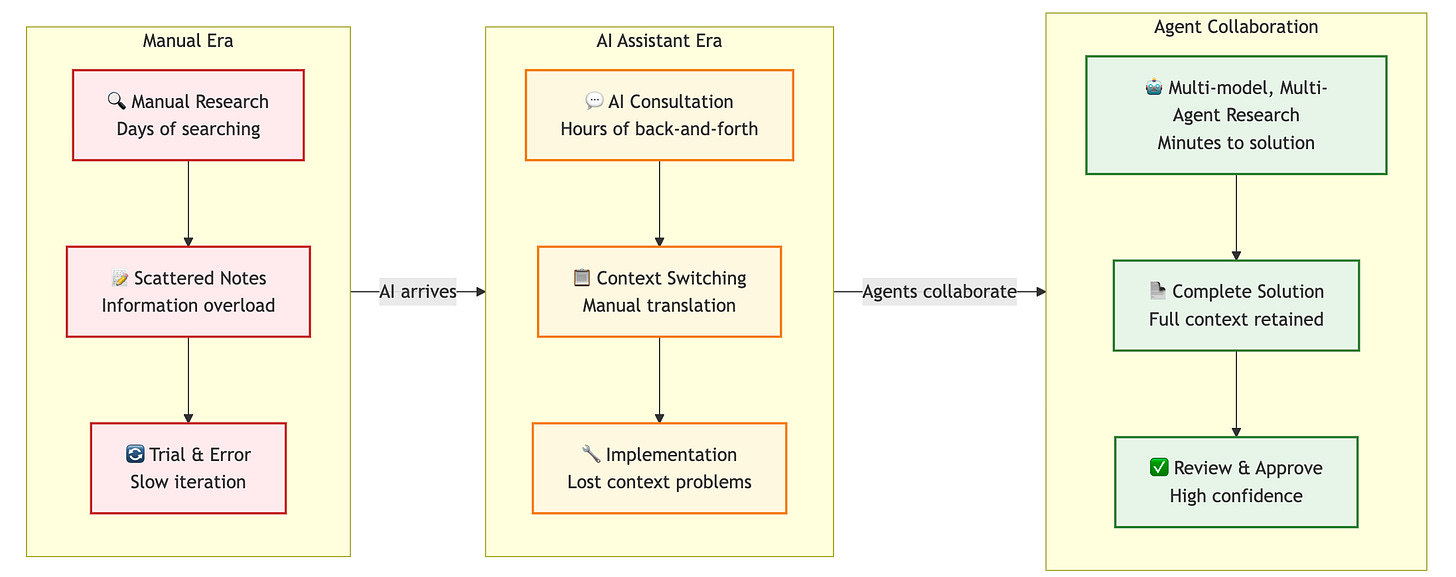

Stage 1: The Manual Era — Google, Forums, and Tutorial Hell

Before AI tools, building products meant mastering everything: not just coding, but also UX/UI design, marketing, and more. Even within coding, there was always unfamiliar territory. Every new challenge meant hours or days of research before you could even start building.

How It Worked in Practice

The traditional process looked like this:

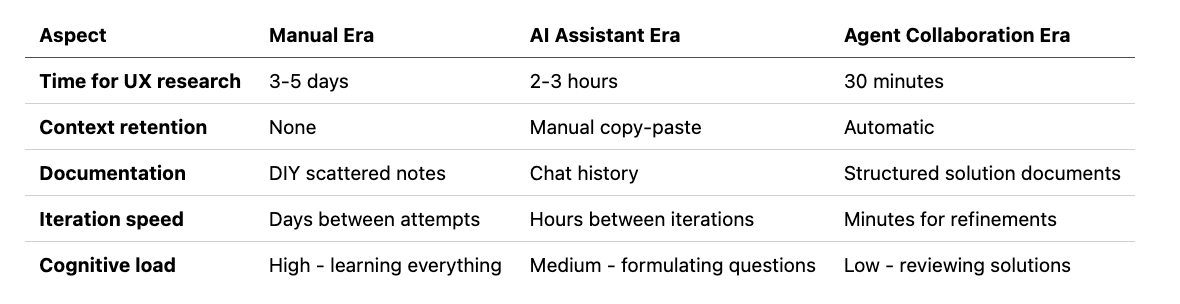

People would apply "lean learning": find a tutorial or book to quickly grasp the high-level concepts, spend time crafting the right search terms and questions for Google or Stack Overflow, and finally adapt whatever generic answers turned up to fit the specific problem.

The typical three-step process was:

Learn the domain basics to understand terminology and concepts

Search with the right keywords and questions

Adapt generic solutions to my specific situation

Specific Example

When building a web app without modern web development experience, the journey typically looked like this:

Days 1-2: Reading tutorials to understand what React, Vue, and Angular even were

Days 3-4: Researching technology stacks on Reddit and Hacker News

Days 5-7: Trying small projects with different frameworks

Week 2: Finally choosing a stack, only to discover new problems I hadn't anticipated

For UX design, it was even worse. Without knowing what to look for (What are design principles? What's the difference between UX and UI?), I would spend days just learning the vocabulary before I could even search for solutions to actual design problems.

Why It Wasn't Enough

The real problem wasn't just the time investment. Even after all that research:

Technology choices weren't optimal because decisions were made while still learning

New problems would emerge during development, requiring more research cycles

Limited time and energy meant not all options could be explored thoroughly

Knowledge would decay—by the time one thing was learned, another was forgotten

Stage 2: The AI Assistant Era — ChatGPT as Your Consultant

I was lucky to start building when ChatGPT and Claude were already available. The landscape had changed, with expert consultants available 24/7. The promise was compelling: instant access to knowledge across any domain, personalized to my specific situation.

How It Worked in Practice

Instead of the manual three-step process, AI streamlined everything:

Direct questioning: Ask AI to explain concepts or recommend resources instantly

Problem description: Describe my specific situation and get tailored advice

Customized solutions: Receive answers already adapted to my exact use case

The workflow became much more conversational and iterative. I could ask follow-up questions, request clarifications, and dive deeper into specific aspects without starting new searches each time.

Specific Example

In my previous blog post, I described exactly how I use ChatGPT to design the UI:

Created a ChatGPT project with a UX expert system prompt

Shared screenshots of my current interface and asked for feedback on specific design principles

Had an extended discussion where I asked about terminology I didn't understand—"What's visual hierarchy? Why does whitespace matter?"—and shared all my concerns and constraints

Asked the AI to summarize our entire conversation into actionable UX requirements

Copy-pasted those requirements to my coding agent (Claude Code) for implementation

This process took 2-3 hours instead of 3-5 days. The AI could explain complex concepts in ways that made sense for my specific context, not generic textbook definitions.

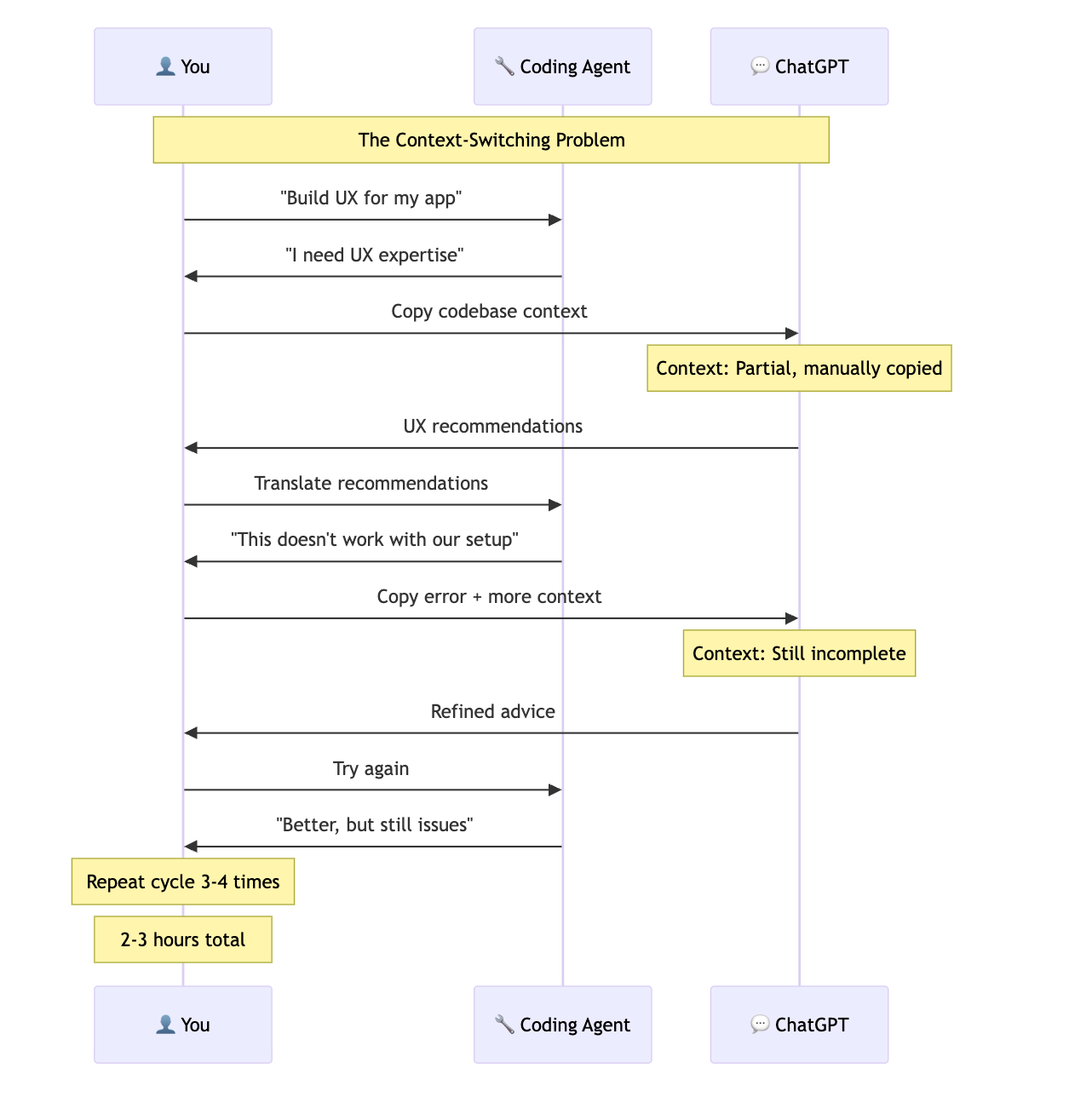

The Context-Switching Workflow

Why It Wasn't Enough

Even with AI help, I noticed two persistent issues:

Time overhead remained: When working in unfamiliar domains, I still didn't know how to ask the right questions initially. I'd spend significant time in back-and-forth discussions before reaching useful conclusions. It was faster than manual research, but still interrupted my flow.

Context loss was painful: Every time I switched between Claude Code and ChatGPT, I lost context. I'd have to re-explain my codebase situation, copy-paste code snippets, and translate between different conversations. The solutions didn't always work on the first try because the AI giving advice couldn't see my actual implementation.

I realized the ideal solution would be letting my coding agent directly communicate with research specialists, eliminating the context switching and manual translation work.

Stage 3: The Agent Collaboration Era — When AI Agents Research for Each Other

The context-switching problem became unbearable. I was spending too much time acting as a translator between my coding agent and the research AI, losing important details in the process. I thought: "Why can't my coding agent just talk directly to the research experts?"

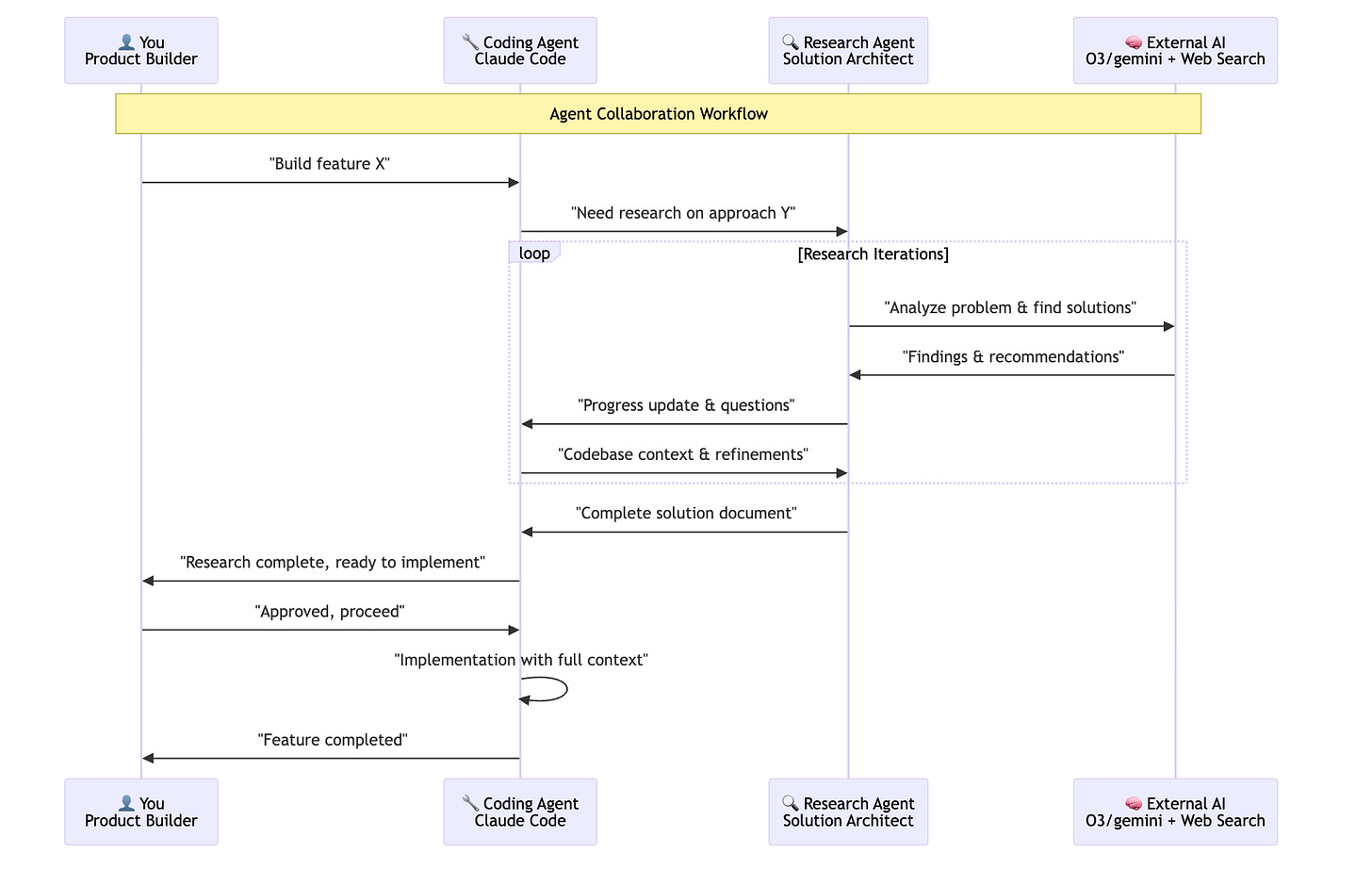

How It Works in Practice

Zen MCP

The breakthrough came when I discovered Zen MCP, an MCP (Model Context Protocol) server that lets AI agents call other AI models directly.

Zen MCP enables direct AI-to-AI communication across different platforms. My Claude Code agent can directly consult OpenAI's models (like GPT-4 or o3), Google's Gemini, and other AI systems without me having to relay the conversation manually. This means I can leverage the strengths of different AI models (perhaps o3 for deep reasoning, Gemini for certain types of analysis, or specialized models for domain-specific expertise) all within a single automated workflow.
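To make "consulting other models" concrete, here is a minimal Python sketch of the pattern from the calling agent's side. It is not Zen MCP's API: the `ModelClient` type, the `consult` and `route` helpers, and the model names used as keys are hypothetical placeholders, and the stub lambdas stand in for real provider or MCP tool calls. The sketch shows two options: fanning one question (plus shared context) out to several models, or routing a task to the single model best suited for it.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical: a "model client" is any function that takes a prompt and returns text.
# In a real setup this would wrap a provider SDK call or an MCP tool call.
ModelClient = Callable[[str], str]


@dataclass
class Consultation:
    question: str
    answers: Dict[str, str]  # model name -> that model's reply


def route(task_kind: str, routes: Dict[str, str], default: str) -> str:
    """Pick which model to ask for a given kind of task, falling back to a default."""
    return routes.get(task_kind, default)


def consult(question: str, context: str, models: Dict[str, ModelClient]) -> Consultation:
    """Send the same question plus shared context to every model and collect the replies."""
    prompt = f"Context:\n{context}\n\nQuestion:\n{question}"
    answers = {name: client(prompt) for name, client in models.items()}
    return Consultation(question=question, answers=answers)


if __name__ == "__main__":
    # Stub clients standing in for real models; the names are placeholders.
    stubs: Dict[str, ModelClient] = {
        "reasoning-model": lambda p: "[step-by-step diagnosis]",
        "analysis-model": lambda p: "[comparison of options]",
    }
    routes = {"reasoning": "reasoning-model", "analysis": "analysis-model"}
    print(route("reasoning", routes, default="analysis-model"))
    result = consult("Why does the list view re-render on every keystroke?",
                     "React app; a search box filters a 5,000-item list.", stubs)
    for name, answer in result.answers.items():
        print(f"{name}: {answer}")
```

Fanning out is the simplest way to get independent second opinions; routing is cheaper when a question clearly belongs to one model's strengths.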

Research Agent

But Zen MCP alone wasn't enough; I also needed a specialized research agent. So I built a "research-solution-architect" agent in Claude Code with two main capabilities: web search and Zen MCP integration.

I've open-sourced the full prompt I use for this agent here

The key design principles that make this agent effective:

Role specialization: It's positioned as a Senior Research Solution Architect, giving it the right mindset for complex problem-solving rather than just quick fixes.

Multi-source research: The agent combines multiple research approaches: examining the actual codebase, conducting web searches for current best practices, and engaging in detailed discussions with the o3 model to validate ideas and explore alternatives.

Structured documentation: Instead of just providing an answer, it produces comprehensive documents with clear sections: Executive Summary, Problem Analysis, Research Findings, Implementation Plan, and Technical Details. This ensures nothing gets lost in translation.

Audit trail: The agent documents not just the final solution but the entire reasoning process, including alternative approaches that were considered and rejected. This transparency lets me understand why certain decisions were made.
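The structured-documentation and audit-trail principles can be sketched as a simple data type. This is an illustrative Python sketch, not the format defined in the open-sourced prompt: the `SolutionDoc` and `Alternative` names, the Markdown layout, and the "Alternatives Considered" heading are hypothetical. It renders the five sections in a fixed order and records rejected options alongside the reason they lost.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Alternative:
    name: str
    reason_rejected: str


@dataclass
class SolutionDoc:
    title: str
    executive_summary: str
    problem_analysis: str
    research_findings: List[str]
    implementation_plan: List[str]
    technical_details: str
    alternatives: List[Alternative] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the document with its sections in a fixed, predictable order."""
        lines = [f"# {self.title}", "",
                 "## Executive Summary", self.executive_summary, "",
                 "## Problem Analysis", self.problem_analysis, "",
                 "## Research Findings"]
        lines += [f"- {finding}" for finding in self.research_findings]
        lines += ["", "## Implementation Plan"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.implementation_plan, start=1)]
        lines += ["", "## Technical Details", self.technical_details, "",
                  "## Alternatives Considered"]
        # Audit trail: keep the rejected options and why, not just the winner.
        if self.alternatives:
            lines += [f"- {alt.name}: rejected because {alt.reason_rejected}"
                      for alt in self.alternatives]
        else:
            lines.append("- None recorded")
        return "\n".join(lines) + "\n"
```

A fixed section order makes documents from different research runs easy to skim and compare, and the explicit "None recorded" line makes a missing audit trail visible instead of silently absent.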

Now my complete setup works like this:

My coding agent encounters a problem or I ask it to research something

It calls the research specialist with full context about the codebase and problem

They have a discussion—the coding agent can ask the right technical questions immediately

A solution document is produced with the complete reasoning and recommendations

I review and decide whether to implement the proposed solution
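The five steps can be sketched as a small control loop. Everything here is a hypothetical stand-in: `ResearchRequest`, `ResearchResult`, and the `research_agent`, `approve`, and `implement` callbacks are placeholders for Claude Code, the research subagent, and the human review step, not real APIs. What the sketch shows is the shape of the flow: the coding agent packages the problem with codebase context, the research side returns recommendations, and nothing is implemented until a human approves.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ResearchRequest:
    problem: str            # step 1: what the coding agent hit, or what I asked for
    codebase_context: str   # step 2: context the coding agent attaches automatically


@dataclass
class ResearchResult:
    summary: str
    recommendations: List[str]


def run_research_cycle(
    request: ResearchRequest,
    research_agent: Callable[[ResearchRequest], ResearchResult],
    approve: Callable[[ResearchResult], bool],
    implement: Callable[[List[str]], None],
) -> bool:
    """Hand full context to the researcher, then gate implementation on human review.

    Returns True if the recommendations were approved and handed off for implementation.
    """
    result = research_agent(request)   # steps 2-4: context in, solution document out
    if not approve(result):            # step 5: a human decides
        return False
    implement(result.recommendations)
    return True
```

Keeping `approve` as an explicit callback is what makes step 5 a real gate rather than a formality: the research side can propose anything, but only approved recommendations reach the implementation step.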

The result is that unclear problems get transformed into crystal-clear, actionable solution documents that can be confidently implemented.

How Agent Collaboration Works

Why This Actually Works Better

Compared with the context-switching workflow, this approach improves things in three key ways:

Context retention and multiplexing: The coding agent keeps its working knowledge of the codebase in context while a separate research agent explores unknown territory. Because the coding agent has full access to the codebase, it can share complete context with the research agent directly, including relevant code snippets, file structures, dependencies, and implementation details that would be tedious and error-prone to copy-paste manually. This eliminates both repeated prompt priming and the context loss that comes from incomplete information transfer.

Focus shift to verification and decision-making: I'm not spending time gathering data anymore. Instead, I'm evaluating trade-offs, making strategic decisions, writing tests, and shipping features. The agents handle the time-consuming research work while I maintain decision-making control.

Higher ambition ceiling: I can now tackle projects that previously would have required a small team. The research and learning overhead has been dramatically reduced while actually improving the quality of solutions through multi-model collaboration.
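The context-retention point is the one that most clearly benefits from automation. Below is a minimal Python sketch of the kind of briefing a coding agent could assemble before calling a research agent: a file listing plus the contents of files that mention the problem's keywords, trimmed to a size budget. The `build_context_packet` helper, its default file suffixes, and the 4,000-character budget are hypothetical choices for illustration; a real agent decides what to include far more selectively.

```python
from pathlib import Path
from typing import Iterable, List, Tuple


def build_context_packet(root: str, keywords: Iterable[str],
                         suffixes: Tuple[str, ...] = (".py", ".ts", ".tsx", ".json"),
                         max_chars: int = 4000) -> str:
    """Collect a file tree plus snippets of files that mention any keyword.

    Hypothetical helper approximating what a coding agent can attach
    automatically when briefing a research agent.
    """
    base = Path(root)
    files: List[Path] = sorted(
        p for p in base.rglob("*") if p.is_file() and p.suffix in suffixes
    )
    tree = "\n".join(p.relative_to(base).as_posix() for p in files)
    parts = ["## File structure", tree, "", "## Relevant snippets"]
    words = [k.lower() for k in keywords]
    for path in files:
        text = path.read_text(encoding="utf-8", errors="ignore")
        # Case-insensitive keyword match decides which files get included in full.
        if any(w in text.lower() for w in words):
            parts += [f"### {path.relative_to(base).as_posix()}", text.strip(), ""]
    packet = "\n".join(parts)
    # Respect a rough size budget so the briefing fits in the other model's context.
    return packet[:max_chars]
```

Even this naive version shows why copy-pasting by hand falls short: the structure and the relevant files are gathered in one pass, every time, with nothing forgotten between conversations.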

This isn't about blindly accepting AI recommendations—it's about letting AI handle the research grunt work while maintaining full transparency and auditability through comprehensive documentation.

Part of my Indie Dev Log series where I share practical lessons from building products.